Imagine you’re in a self-driving car, headed to the grocery store. As you look out the window, you spot a school bus in the oncoming traffic. Out of nowhere, a biker swerves into your lane. Your car can do one of two things: hit the biker, or steer into the school bus.

Now imagine you’re one of the engineers who designed that self-driving car. You’re responsible for the small part of the car’s programming that determines how it will respond when unexpected people or objects swerve into its lane. Whose safety—whose life—should the car prioritize? How would you code what the car should do?

This thought experiment (loosely based on the so-called trolley problem) is growing less hypothetical by the day. Designing emerging technologies, including self-driving cars, creates a thicket of moral dilemmas that most engineers and entrepreneurs are not trained to negotiate.

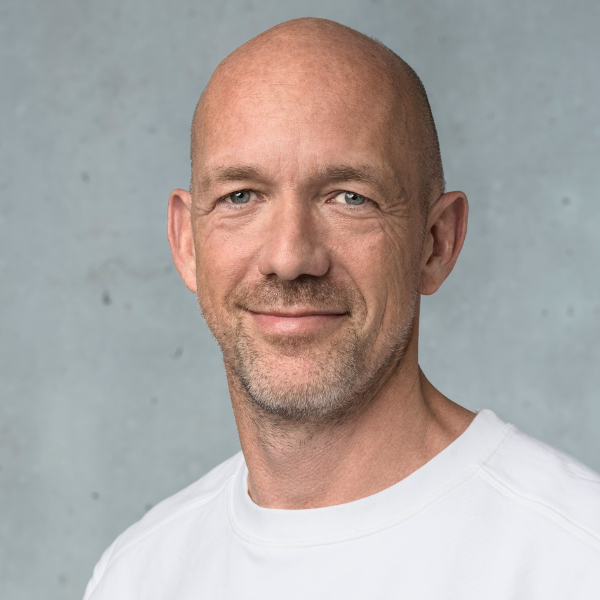

What’s needed in tech and related fields, philosopher Jason Millar believes, is a greater ability to recognize, articulate and navigate the moral challenges posed by technology—what he describes as ethics capacity-building. Millar, a postdoctoral scholar at Stanford’s McCoy Family Center for Ethics in Society, has not only studied emerging philosophical questions in engineering, robotics, and artificial intelligence—he faced those issues during his years as a practicing engineer.

The trolley problem is an especially dramatic example of a challenge that exists across the technology sector: innovation is happening so rapidly that it’s flying past serious ethical questions without fully addressing them, or recognizing them as ethical questions at all. Russian propaganda designed to undermine the US presidential election flourished unchecked on Facebook and Twitter. Waze users are reshaping traffic flow in communities across the US, dramatically altering the character of once-quiet neighborhoods.

The teams at Facebook, Twitter and Waze likely never imagined themselves as arbiters of free speech or urban design. But, in Millar’s view, that’s part of the problem: whether they think they are or not, engineers have become de facto policymakers, embedding social values and preferences into the products they design. Millar argues engineers need to get better, and soon, at understanding the downstream social effects of the technology they’re creating.

The trolley problem and vexing issues of privacy and free expression will never be easy to solve. But by gaining more awareness of the implications of their choices, Millar argues engineers and entrepreneurs will actually design better technology.

Here are four principles, based on Millar’s work, which can help entrepreneurs and engineers seeking to incorporate ethical thinking into their work—no philosophy degree required.

Start by viewing ethics as essential to good design

Ethics and innovation aren’t at war, Millar emphasizes. “When I talk about thinking about design as an ethical challenge, as well as a technical challenge, I’m thinking about avoiding social failures,” he says—such as Facebook’s role in the spread of Russian propaganda, and Uber’s use of surge-pricing during natural disasters.

Incorporating ethics into design isn’t just about making lawyers happy. When entrepreneurs probe more deeply into the social and moral implications of their products, it can help their products thrive—and allow them to avoid costly or embarrassing missteps that might alienate them from potential customers.

“If you avoid those social failures, you just have a better chance of your technology succeeding. Your business model has to be aligned with the social realities and the ethical realities of your user base,” he says.

Incorporate ethics into the design room

Millar has seen the power of ethical thinking to address design problems in ethics workshops he’s developed, with Stanford Professor Chris Gerdes, at the Center for Automotive Research at Stanford (CARS). The workshops focus on the seemingly straightforward question of how self-driving cars should navigate pedestrian intersections. They introduce the engineers to the many stakeholders in that car-pedestrian interaction (car manufacturers, government regulators, municipal governments responsible for signage, vulnerable road users such as individuals with disabilities, and so on) and ask them to imagine what’s important to each of those stakeholders.

Gradually, the engineers stop focusing solely on technical issues (when should the car slow down, and by how much?) and begin grappling with the competing human values at play. What’s ideal for one user might not be for another. A good design solution, the engineers often begin to realize, is one that balances the concerns and priorities of a broader group of stakeholders.

“The value in this work is just in allowing [engineers] to reframe the problem in terms of people and values in order to develop more satisfactory design options,” Millar says.

Borrow from existing models, such as the health care industry

Can an entire industry get better at handling vexing ethical issues? Millar thinks it’s possible—because health care already did.

Fifty years ago, practices that are unthinkable to us today, such as lying to patients (ostensibly for their own good) or administering treatment without obtaining patient consent, were a routine part of Western medicine.

Today, however, the practice of informed consent is enshrined in law and medical ethics, and clinicians are much more aware when they’re dealing with ethical issues. An entire field devoted to ethical questions in biology and medicine has emerged. Bioethicists now sit on the faculties of medical schools across the country, and clinical ethicists provide valuable services to health care providers and patients on ethical decision-making.

Many of these ideas could be adapted to the technology sector and incorporated into the engineering workflow. Companies could convene groups like hospital ethics boards or institutional review boards to review their new products and features, and flag potential issues before release. And just as bioethicists work alongside physicians and researchers, staff ethicists could counsel engineers and consult on especially complex projects. Workshops like the ones Millar leads at CARS might give engineers new tools to identify ethical challenges in their work.

Make ethics everyone’s job

When Millar was training to be an engineer, he got a typical ethics education, mostly aimed at preventing physical harm: don’t take on projects for which you aren’t qualified. Double-check everything. Never sign off on a design you haven’t reviewed carefully.

These were good lessons, but they didn’t prepare Millar for the quandaries he faced as a working engineer. While doing chip design for a telecommunications company, he began getting requests to build in the technical capabilities that would allow the National Security Agency to spy on consumers.

Features like the ones he was asked to build “have ethical implications, but those implications aren’t the type that I was trained to pay attention to,” Millar says. “It wasn’t a business ethics question. It was more of a technology ethics question: should we be participating in developing the kinds of technologies that do X, Y or Z?” Questions like these ultimately drove Millar’s decision to return to school and study ethics.

Though Millar doesn’t see a one-size-fits-all solution, he does suggest that engineers begin to view design ethics as part of their job. Devote more time to thinking about what you’ve been asked to make, and why. Read widely, and don’t shy away from academic literature that might be relevant to your work. If your company doesn’t have an ethics board or an ethicist on staff, ask for them—and for additional ethics training. If you think the feature you’ve been asked to build has social consequences that haven’t been discussed, tell your manager and colleagues. “Young engineers doing that—that’s a good thing,” Millar says.

Changing the culture of the industry isn’t something individual engineers can or should do alone. Millar believes that many stakeholders, from policy makers to philosophers, have a role in making technology more responsive to ethical concerns.

“It’s just like when you’re doing mechanical design,” Millar reflects. “It’s not just that we need better gear design, or better materials—we need all of that stuff, and then we need a way of thinking about how to make the gears, and we need policies in place to make sure that when we’re making gears, we’re using the right types of materials.

“Designing technology is complex, but we do it all the time. Confronting ethics is just a matter of adding new things to the toolkit—new processes and new ways of thinking about problems.”